Mistake 13: Not parallelizing

Not parallelizing your code is not critical in the sense that it will not affect your results. However, I am including it here because failing to save time (and time is valuable) is still a mistake when you have the resources, namely multiple CPU cores, to run things faster. Having multiple CPUs/cores in your computer will not automatically make your code run faster; you need to explicitly parallelize it. Writing parallel programs is not an easy task, and not every algorithm can be parallelized. Fortunately, many scikit-learn models have already been parallelized, and taking full advantage of this is as easy as setting one parameter: n_jobs.

By default, n_jobs=None, which means a single job is used when calling fit() and predict() (unless the call runs inside a joblib parallel_backend context that sets another value). Setting it to \(-1\) will use all the available processors/cores in your computer. However, I do not recommend using all your cores. Leave some of them free for other processes (operating system, browser, background applications, etc.); otherwise, your computer may slow down. For example, my CPU has \(20\) cores, so I set n_jobs=15 to leave the remaining \(5\) cores for other processes.
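How an n_jobs value translates into a number of workers can be sketched in plain Python. The helpers below, effective_jobs() and jobs_leaving_free(), are hypothetical and do not call scikit-learn; effective_jobs() only mirrors the convention documented in the scikit-learn glossary: None means one job, \(-1\) means all CPUs, and values below \(-1\) mean n_cpus + 1 + n_jobs. Under that rule, n_jobs=-6 on a \(20\)-core machine gives the same \(15\) workers as n_jobs=15.

```python
import os
from typing import Optional


def effective_jobs(n_jobs: Optional[int], n_cpus: int) -> int:
    """Return how many workers an n_jobs setting maps to (hypothetical helper).

    Mirrors the documented scikit-learn/joblib convention; it does not
    call either library and ignores joblib backend contexts.
    """
    if n_jobs is None:
        return 1  # default: a single job
    if n_jobs == 0:
        raise ValueError("n_jobs=0 has no meaning")
    if n_jobs < 0:
        # -1 -> all CPUs, -2 -> all but one, ... never fewer than 1
        return max(n_cpus + 1 + n_jobs, 1)
    return n_jobs


def jobs_leaving_free(n_free: int) -> int:
    """Positive n_jobs value that leaves n_free logical CPUs idle (hypothetical)."""
    total = os.cpu_count() or 1  # os.cpu_count() may return None
    return max(total - n_free, 1)


if __name__ == "__main__":
    print(effective_jobs(None, 20))  # 1
    print(effective_jobs(-1, 20))    # 20
    print(effective_jobs(-6, 20))    # 15, same as n_jobs=15
    print(jobs_leaving_free(5))      # depends on this machine
```

In practice you would pass the result straight to the estimator, for example RandomForestRegressor(n_jobs=jobs_leaving_free(5)). Keep in mind that os.cpu_count() reports logical CPUs, which can be twice the number of physical cores on machines with hyper-threading.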

The following example uses the CALIFORNIA-HOUSING dataset, which has \(20640\) instances and \(8\) features. The target value is the median house value, so this is a regression problem and we will train a random forest regressor.

from sklearn.datasets import fetch_california_housing

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestRegressor

from sklearn.metrics import mean_absolute_error

import time

df = fetch_california_housing()

X_train, X_test, y_train, y_test = train_test_split(df.data,

df.target,

test_size = 0.2,

random_state = 123)

rf = RandomForestRegressor(random_state = 123)

start = time.time()

rf.fit(X_train, y_train)

y_pred = rf.predict(X_test)

end = time.time()

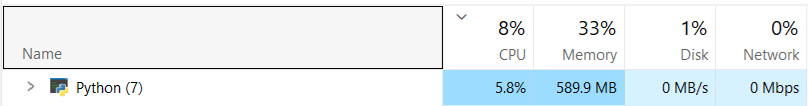

print(f"MAE={mean_absolute_error(y_test, y_pred):.3f}" f" time (seconds) {end-start:.2f}")After splitting the data, I am training a RandomForestRegressor() and making predictions on the test set. Then, the mean absolute error (MAE) is computed. Finally, the MAE and the time it took to train the model and make the predictions is printed. Since this dataset is fairly large, it took \(26.49\) seconds in my computer. By looking at the Windows task manager (Figure 13.1 you can see that the Python program was using around \(5.8\%\) of the CPU and only \(8\%\) was used overall. Now let’s see if we can do better than that.

Figure 13.1: CPU usage with one job.
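The snippets in this section measure elapsed time with time.time(), which reads the system wall clock and can jump if the clock is adjusted. Python's time.perf_counter() is a monotonic, high-resolution clock designed for measuring short durations. The timed() helper below is a hypothetical convenience, not part of the original code: it calls a function one or more times and returns the last result together with the best (smallest) elapsed time, which helps reduce noise from background processes.

```python
import time
from typing import Any, Callable, Tuple


def timed(fn: Callable[..., Any], *args: Any, repeat: int = 1,
          **kwargs: Any) -> Tuple[Any, float]:
    """Call fn(*args, **kwargs) `repeat` times; return (last result, best seconds).

    Hypothetical helper: uses time.perf_counter() instead of time.time().
    """
    if repeat < 1:
        raise ValueError("repeat must be at least 1")
    best = float("inf")
    result = None
    for _ in range(repeat):
        start = time.perf_counter()
        result = fn(*args, **kwargs)
        best = min(best, time.perf_counter() - start)
    return result, best


if __name__ == "__main__":
    total, seconds = timed(sum, range(1_000_000), repeat=3)
    print(f"result={total} best time (seconds) {seconds:.4f}")
```

With the forest from this section, you could time the two steps separately, for example `_, fit_seconds = timed(rf.fit, X_train, y_train)`. Note that each repetition of fit() retrains the model from scratch, so repeat=1 is the sensible choice for slow models.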

In the following code snippet, I am setting the n_jobs parameter to \(15\) when creating the RandomForestRegressor() object. My computer has \(20\) cores, so I am leaving \(5\) free cores for other tasks.

rf = RandomForestRegressor(random_state = 123, n_jobs = 15)

start = time.time()

rf.fit(X_train, y_train)

y_pred = rf.predict(X_test)

end = time.time()

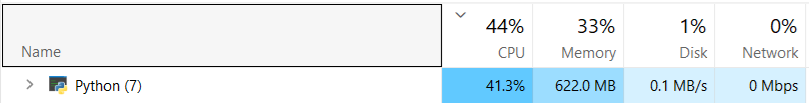

print(f"MAE={mean_absolute_error(y_test, y_pred):.3f}" f" time (seconds) {end-start:.2f}")From the results, we can see that even though the performance was the same (\(0.324\)) it only took \(3.98\) seconds to complete! Figure 13.2 shows my task manager.

Figure 13.2: CPU usage with \(15\) jobs.

This time, Python was using \(41.3\%\) of the CPU (about \(8\) cores' worth out of \(20\)), spreading the work across multiple cores.
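The two timings reported above can be summarized as a speedup (serial time divided by parallel time) and a parallel efficiency (speedup divided by the number of jobs). The short computation below uses only the numbers from this section; the function names are illustrative. An efficiency well below \(1\) is common, likely because of overhead such as starting the workers and any steps that still run serially.

```python
def speedup(serial_seconds: float, parallel_seconds: float) -> float:
    """How many times faster the parallel run was."""
    return serial_seconds / parallel_seconds


def efficiency(serial_seconds: float, parallel_seconds: float, n_jobs: int) -> float:
    """Speedup per job; 1.0 would mean perfect linear scaling."""
    return speedup(serial_seconds, parallel_seconds) / n_jobs


if __name__ == "__main__":
    # Timings reported in this section: n_jobs=None vs. n_jobs=15
    s = speedup(26.49, 3.98)
    e = efficiency(26.49, 3.98, 15)
    print(f"speedup={s:.2f}x efficiency={e:.2f}")  # speedup=6.66x efficiency=0.44
```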

Many other scikit-learn estimators and functions, such as GridSearchCV and cross_val_score(), also have the n_jobs parameter, which specifies the number of jobs to run in parallel.